-

Retrieve Table Columns with Specific Keywords in PySpark SQL

This post uses PySpark SQL to find columns whose names contain the keyword “id” in three tables: EMP1, DEPT1, and ALLOWANCE1. A Python script retrieves and filters these columns, then builds a DataFrame that lists each table alongside its matching columns for easy viewing within a database…
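The filtering step can be sketched as follows. This is a minimal illustration, assuming the match is a case-insensitive substring test on column names. The helper `columns_with_keyword` and the sample column lists are hypothetical and are not taken from the post.

```python
from typing import Dict, List


def columns_with_keyword(table_columns: Dict[str, List[str]], keyword: str) -> Dict[str, List[str]]:
    """Return, per table, the column names that contain `keyword` (case-insensitive)."""
    needle = keyword.lower()
    return {
        table: [col for col in cols if needle in col.lower()]
        for table, cols in table_columns.items()
    }


# Hypothetical column lists; with a live SparkSession they could be built as
# {t: spark.table(t).columns for t in ["EMP1", "DEPT1", "ALLOWANCE1"]}.
sample_columns = {
    "EMP1": ["emp_id", "emp_name", "dept_id"],
    "DEPT1": ["dept_id", "dept_name"],
    "ALLOWANCE1": ["allowance_id", "emp_id", "amount"],
}

matches = columns_with_keyword(sample_columns, "id")
for table, cols in matches.items():
    print(table, cols)
```

A plain substring test also matches names such as `width` or `paid`, so a stricter rule (for example, a suffix check on `_id`) may suit some schemas better.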

-

25 Key Generative AI (GenAI) Terms Explained Simply

GenAI is changing the game in industries like healthcare and content creation. Check out our guide to 25 must-know terms that explain its influence!

-

Top 10 In-Demand IT Skills for 2025

The rapid advancement of technology has heightened the demand for skilled IT professionals. By 2025, key skills in demand will include Artificial Intelligence, Cybersecurity, Cloud Computing, Data Science, Blockchain, DevOps, IoT, AR/VR, Quantum Computing, and essential soft skills. Staying current with these competencies is crucial for career competitiveness.

-

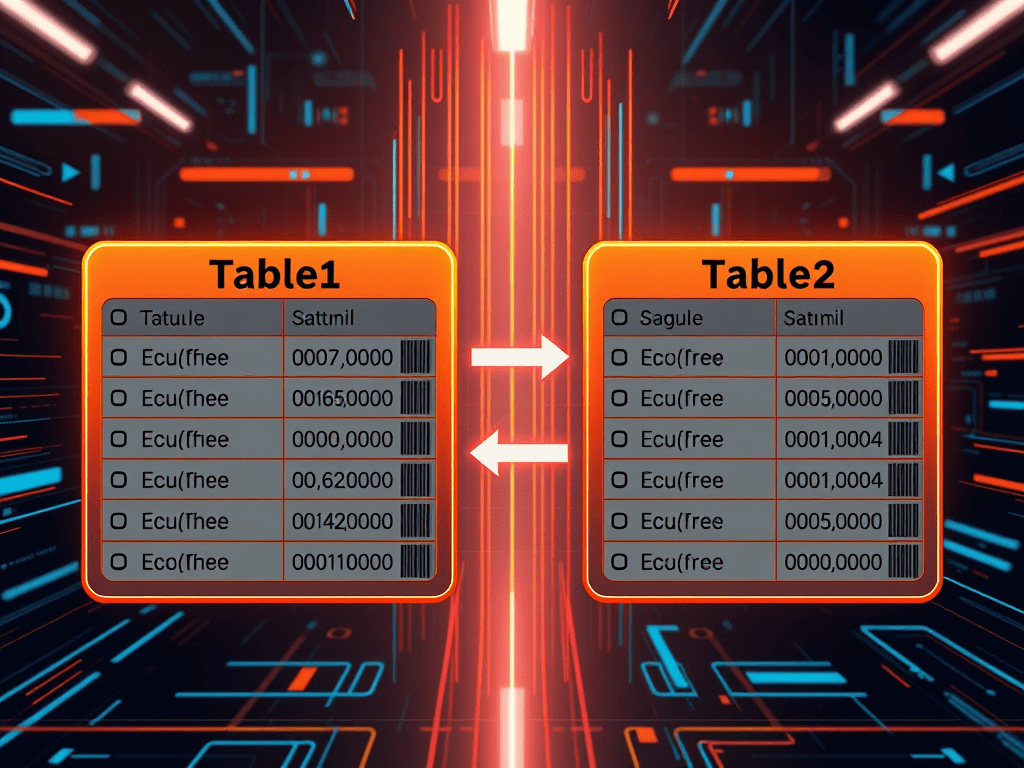

Calculate Match Percentage in PySpark SQL

The PySpark SQL query in this post calculates the match percentage of a column (s_code) between two tables, Table1 and Table2. It uses Common Table Expressions (CTEs) to extract unique values, count matches, and compute the percentage based on total unique entries. The output shows the resulting match statistics.
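The shape of such a CTE query might look like the sketch below. It is not the post's query: it assumes the denominator is the number of distinct s_code values in Table1, uses made-up sample rows, and runs on Python's built-in `sqlite3` as a stand-in engine so it can be tried without a Spark cluster.

```python
import sqlite3

# Hypothetical sample data; only the names Table1, Table2 and s_code come from the post.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Table1 (s_code TEXT);
    CREATE TABLE Table2 (s_code TEXT);
    INSERT INTO Table1 VALUES ('A'), ('B'), ('C'), ('D'), ('A');
    INSERT INTO Table2 VALUES ('A'), ('C'), ('E');
""")

query = """
WITH t1 AS (SELECT DISTINCT s_code FROM Table1),
     t2 AS (SELECT DISTINCT s_code FROM Table2),
     matched AS (
         -- distinct Table1 values that also appear in Table2
         SELECT COUNT(*) AS match_count
         FROM t1 JOIN t2 ON t1.s_code = t2.s_code
     ),
     total AS (
         -- assumption: percentage is relative to distinct values in Table1
         SELECT COUNT(*) AS total_count FROM t1
     )
SELECT match_count,
       total_count,
       ROUND(100.0 * match_count / total_count, 2) AS match_percentage
FROM matched CROSS JOIN total
"""

match_count, total_count, match_percentage = conn.execute(query).fetchone()
print(match_count, total_count, match_percentage)
```

With this sample data, two of the four distinct Table1 values (A and C) also occur in Table2, so the query reports 50.0. A different choice of denominator, such as the distinct values across both tables, would give a different percentage.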

-

Celebrate 15 Years with Us!

We are excited to celebrate 15 years of delivering quality content and exceptional service! We invite you to join the celebration by liking and sharing our site. Your support has been invaluable, and we look forward to many more years of success together. Thank you for being part of our…

-

A Comprehensive Guide to PySpark SQL Merge Query

This post covers the MERGE statement in PySpark SQL and its role in merging datasets efficiently, particularly in Delta tables. It explains how to conditionally update and insert data, outlines prerequisites, provides the syntax and a practical example, and highlights common pitfalls and best practices for effective implementation in big…
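To make the conditional update-and-insert behaviour concrete, here is a plain-Python sketch of what a MERGE with one `WHEN MATCHED THEN UPDATE` clause and one `WHEN NOT MATCHED THEN INSERT` clause does to a target table. It is not Spark or Delta code. The function `merge_upsert`, the key name `id`, and the sample rows are all hypothetical.

```python
from typing import Dict, List

Row = Dict[str, object]


def merge_upsert(target: List[Row], source: List[Row], key: str) -> List[Row]:
    """Emulate MERGE: update target rows whose key matches a source row, insert the rest."""
    merged = {row[key]: dict(row) for row in target}
    for row in source:
        if row[key] in merged:
            merged[row[key]].update(row)   # WHEN MATCHED THEN UPDATE
        else:
            merged[row[key]] = dict(row)   # WHEN NOT MATCHED THEN INSERT
    return list(merged.values())


# Hypothetical rows keyed on "id".
target_rows = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]
source_rows = [{"id": 2, "name": "Robert"}, {"id": 3, "name": "Cy"}]

result = merge_upsert(target_rows, source_rows, key="id")
print(result)
```

One difference worth noting: this sketch lets the last source row win when several source rows share a key, whereas Delta Lake's MERGE raises an error when multiple source rows match the same target row, so source data is usually deduplicated first.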

-

How to Set Up Kinesis Firehose in AWS: Step-by-Step Guide

Master Kinesis Firehose in AWS! Follow our expert guide for easy setup and seamless configuration. Start your stream journey today!

-

Ingesting Data from Kinesis to Delta Live Tables

To ingest data from Amazon Kinesis into a Delta Live Tables Bronze layer, set up a streaming pipeline in Databricks. Configure AWS access, establish a Kinesis stream, and define a Bronze layer table using the readStream API. After processing, verify data and prepare for Silver and Gold layers, ensuring schema…

-

Delta Live Tables vs Normal Data Pipelines

Databricks Delta Live Tables (DLT) offers a declarative framework, optimized for Delta Lake, that streamlines building production-grade pipelines with automated task management, data quality checks, and real-time monitoring. In contrast, normal data pipelines require manual orchestration and custom coding, which gives more flexibility but demands more maintenance and monitoring effort.