Use pip or apt-get to install BeautifulSoup for Python. If you hit errors after installing, the commands below show how to fix them.

Install the BeautifulSoup library for Python on Linux with either of the methods below.

Method 1

$ apt-get install python3-bs4 (for Python 3)

Method 2

$ pip install beautifulsoup4

Note: If you don’t have easy_install or pip installed, download the Beautiful Soup source and run its setup script from the source directory:

$ python setup.py install

How to Fix Syntax Error After Installation

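A syntax error right after installation usually means the Python 2 version of the code is being run under Python 3. Either reinstall the package with setup.py under Python 3:

$ python3 setup.py install

or convert the Python 2 code to Python 3 code by running the 2to3 script on the bs4 directory. The script name depends on the installed Python version; 2to3-3.2 is the one shipped with Python 3.2.

$ 2to3-3.2 -w bs4

How to Install lxml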

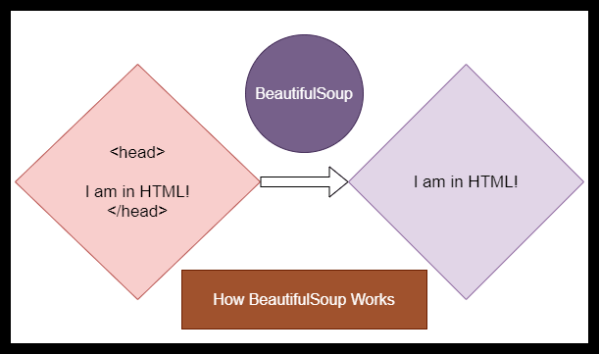

BeautifulSoup supports html.parser, the HTML parser included in Python's standard library. You can also install additional parsers such as lxml.

$ apt-get install python-lxml (or python3-lxml for Python 3)

or

$ easy_install lxml

or

$ pip install lxml

How to Install html5lib

$ apt-get install python-html5lib (or python3-html5lib for Python 3)

or

$ easy_install html5lib

or

$ pip install html5lib
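
After running the install commands above, it can help to confirm what actually got installed. The following is a minimal sketch, assuming Python 3.8 or later (for importlib.metadata) and assuming the packages were installed under their PyPI names. The helper names installed_versions and pick_parser are hypothetical, introduced only for this illustration. pick_parser returns a parser name that can be passed as the second argument to BeautifulSoup, preferring lxml, then html5lib, then the built-in html.parser.

```python
from importlib import metadata

# Distribution names as published on PyPI (the names used with pip install).
PACKAGES = ("beautifulsoup4", "lxml", "html5lib")


def installed_versions(packages=PACKAGES):
    """Map each package name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def pick_parser(versions):
    """Return a parser name for BeautifulSoup's second argument.

    Prefers lxml, then html5lib, and falls back to the built-in html.parser.
    This ordering is an assumption made for the sketch, not a requirement.
    """
    if versions.get("lxml"):
        return "lxml"
    if versions.get("html5lib"):
        return "html5lib"
    return "html.parser"


if __name__ == "__main__":
    found = installed_versions()
    for name, version in found.items():
        print(f"{name}: {version or 'not installed'}")
    print("parser to use:", pick_parser(found))
```

If beautifulsoup4 is reported as not installed, repeat one of the installation methods with the same Python interpreter you use to run this check.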

How to Remove HTML Tags from Web Data

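The BeautifulSoup constructor takes two arguments here. The first is the markup to parse, either an open file object (fp) or an HTML string. The second is the name of the parser, which is html.parser in this example. To parse XML instead, pass xml as the parser name; this requires lxml to be installed.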

Python Code

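from bs4 import BeautifulSoup

# Parse an HTML file from disk (index.html must exist in the working directory)
with open("index.html") as fp:
    soup = BeautifulSoup(fp, 'html.parser')

# Parse an HTML string directly
soup = BeautifulSoup("<html>a web page</html>", 'html.parser')

html = """
<html>
<head>
</head>
<body>
<p>
Here's a paragraph of text!
</p>
<p>
Here's a second paragraph of text!
</p>
</body>
</html>"""

# get_text() drops the tags; strip=True trims each piece of text and skips empty ones
print(BeautifulSoup(html, "html.parser").get_text(separator="\n", strip=True))

The Output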

Here's a paragraph of text!

Here's a second paragraph of text!
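
The tag-removal step can also be wrapped in a small reusable function. This is a sketch only: strip_tags and _TextCollector are hypothetical names, not part of BeautifulSoup. It uses BeautifulSoup's get_text() when bs4 can be imported. If bs4 is not installed yet, it falls back to html.parser.HTMLParser from the standard library, which is a swapped-in technique and may treat unusual markup differently. Whitespace is collapsed to single spaces, which is a design choice of this sketch.

```python
from html.parser import HTMLParser

try:
    from bs4 import BeautifulSoup  # third-party: pip install beautifulsoup4
except ImportError:
    BeautifulSoup = None  # fall back to the standard library below


class _TextCollector(HTMLParser):
    """Hypothetical helper: collects the text found between tags."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        self.chunks.append(data)


def strip_tags(html):
    """Return the text of an HTML string with tags removed."""
    if BeautifulSoup is not None:
        text = BeautifulSoup(html, "html.parser").get_text()
    else:
        collector = _TextCollector()
        collector.feed(html)
        collector.close()
        text = "".join(collector.chunks)
    # Collapse runs of whitespace (including newlines) into single spaces.
    return " ".join(text.split())


if __name__ == "__main__":
    print(strip_tags("<p>Hello <b>world</b></p>"))
```

Passing a whole page, such as the contents of index.html read into a string, works the same way as passing a short fragment.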
